Table of Contents:

- Amazon DOP-C01 Dumps Pdf

- Amazon DOP-C01 Dumps YouTube

- Amazon DOP-C01 Exam Practice Test

- Amazon Discount Code 2021

We share Amazon DOP-C01 exam practice questions and answers from the latest updated leads4pass DOP-C01 dumps, free of charge. Download the latest DOP-C01 dumps PDF from Google Drive. To get the full Amazon DOP-C01 dumps PDF or dumps VCE, visit: https://www.leads4pass.com/aws-devops-engineer-professional.html (Q&As: 449). All Amazon DOP-C01 exam questions have been updated, and the answers have been corrected!

Make sure your practice questions are real and effective so they help you pass the exam on your first attempt!

[Amazon DOP-C01 Dumps PDF] Latest Amazon DOP-C01 dumps PDF collected by leads4pass on Google Drive:

https://drive.google.com/file/d/1CxJ0SUwQGHmG0XpVDqLLyo4gU07s9uYH/

[Amazon DOP-C01 YouTube] Amazon DOP-C01 exam questions and answers, shared free of charge in videos uploaded to YouTube by leads4pass.

Latest Updated Amazon DOP-C01 Exam Practice Questions and Answers (Online Test)

QUESTION 1

What is required to achieve 10-gigabit network throughput on EC2? You have already selected cluster-compute, 10 Gbps instances with enhanced networking, and your workload is already network-bound, but you are not seeing 10-gigabit speeds.

A. Enable biplex networking on your servers, so packets are non-blocking in both directions and there's no switching overhead.

B. Ensure the instances are in different VPCs so you don't saturate the Internet Gateway on any one VPC.

C. Select PIOPS for your drives and mount several, so you can provision sufficient disk throughput.

D. Use a placement group for your instances so the instances are physically near each other in the same Availability Zone.

Correct Answer: D

You are not guaranteed 10-gigabit performance except within a placement group. A placement group is a logical grouping of instances within a single Availability Zone. Using placement groups enables applications to participate in a low-latency, 10 Gbps network. Placement groups are recommended for applications that benefit from low network latency, high network throughput, or both.

Reference: http://docs.aws.amazon.com/AWSEC2/latest/UserGuide/placement-groups.html
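Option D can be sketched with the request parameters of the boto3 EC2 client. This is a minimal sketch that only builds the keyword arguments for `create_placement_group` and `run_instances` (it makes no AWS calls); the group name, AMI ID, and instance type used below are hypothetical placeholders.

```python
def placement_group_requests(group_name, ami_id, instance_type, count):
    """Build kwargs for the EC2 CreatePlacementGroup and RunInstances calls.

    The dicts mirror boto3 EC2 client parameters, so they can be passed as
    ec2.create_placement_group(**pg) and ec2.run_instances(**run).
    """
    # "cluster" packs instances close together inside one Availability Zone.
    pg = {"GroupName": group_name, "Strategy": "cluster"}
    run = {
        "ImageId": ami_id,
        "InstanceType": instance_type,
        "MinCount": count,
        "MaxCount": count,
        # Launching into the group is what gives the low-latency network path.
        "Placement": {"GroupName": group_name},
    }
    return pg, run
```

For example, `pg, run = placement_group_requests("hpc-group", "ami-12345678", "c5n.18xlarge", 4)` followed by `ec2.create_placement_group(**pg)` and `ec2.run_instances(**run)`. The `cluster` strategy is the one that targets network throughput; `spread` and `partition` serve fault-isolation goals instead.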

QUESTION 2

What is the maximum supported single-volume throughput on EBS?

A. 320MiB/s

B. 160MiB/s

C. 40MiB/s

D. 640MiB/s

Correct Answer: A

The ceiling throughput for PIOPS on EBS is 320MiB/s.

Reference: http://docs.aws.amazon.com/AWSEC2/latest/UserGuide/EBSVolumeTypes.html

QUESTION 3

A developer tested an application locally and then deployed it to AWS Lambda. While testing the application remotely, the Lambda function fails with an access denied message.

How can this issue be addressed?

A. Update the Lambda function's execution role to include the missing permissions.

B. Update the Lambda function's resource policy to include the missing permissions.

C. Include an IAM policy document at the root of the deployment package and redeploy the Lambda function.

D. Redeploy the Lambda function using an account with access to the AdministratorAccess policy.

Correct Answer: A

Reference: https://aws.amazon.com/premiumsupport/knowledge-center/access-denied-lambda-s3-bucket/
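For the S3 case in the reference above, the missing permissions go into a policy attached to the function's execution role. A minimal sketch, assuming the function only needs to list one bucket and read its objects (the bucket name is a hypothetical placeholder):

```python
import json


def s3_read_policy(bucket):
    """Return an IAM policy document allowing reads from one S3 bucket."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                # Object-level action: applies to the objects in the bucket.
                "Effect": "Allow",
                "Action": ["s3:GetObject"],
                "Resource": f"arn:aws:s3:::{bucket}/*",
            },
            {
                # Bucket-level action: applies to the bucket ARN itself.
                "Effect": "Allow",
                "Action": ["s3:ListBucket"],
                "Resource": f"arn:aws:s3:::{bucket}",
            },
        ],
    }


if __name__ == "__main__":
    print(json.dumps(s3_read_policy("example-bucket"), indent=2))
```

The resulting document could be attached with boto3 as `iam.put_role_policy(RoleName=..., PolicyName=..., PolicyDocument=json.dumps(policy))`. Splitting the statements matters: `s3:ListBucket` is granted on the bucket ARN, while `s3:GetObject` is granted on the object ARNs under it.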

QUESTION 4

A large enterprise is deploying a web application on AWS. The application runs on Amazon EC2 instances behind an Application Load Balancer. The instances run in an Auto Scaling group across multiple Availability Zones. The application stores data in an Amazon RDS Oracle DB instance and Amazon DynamoDB. There are separate environments for development, testing, and production. What is the MOST secure and flexible way to obtain password credentials during deployment?

A. Retrieve an access key from an AWS Systems Manager SecureString parameter to access AWS services. Retrieve the database credentials from a Systems Manager SecureString parameter.

B. Launch the EC2 instances with an EC2 IAM role to access AWS services. Retrieve the database credentials from AWS Secrets Manager.

C. Retrieve an access key from an AWS Systems Manager plaintext parameter to access AWS services. Retrieve the database credentials from a Systems Manager SecureString parameter.

D. Launch the EC2 instances with an EC2 IAM role to access AWS services. Store the database passwords in an encrypted config file with the application artifacts.

Correct Answer: B
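The approach in option B (an instance IAM role plus AWS Secrets Manager) avoids storing access keys or passwords on the instance or in the artifacts. A minimal sketch, assuming the secret is stored as a JSON string; the client is passed in so the function can be exercised without AWS, and the secret name in the usage note is hypothetical.

```python
import json


def get_db_credentials(client, secret_id):
    """Fetch and decode a JSON database secret from AWS Secrets Manager.

    `client` is expected to be a boto3 Secrets Manager client created on an
    instance whose IAM role allows secretsmanager:GetSecretValue, so no
    access keys are stored on the host.
    """
    response = client.get_secret_value(SecretId=secret_id)
    # SecretString holds the secret text; here it is assumed to be JSON.
    return json.loads(response["SecretString"])
```

On an instance, `get_db_credentials(boto3.client("secretsmanager"), "prod/app/oracle")` would pick up credentials from the instance role automatically, and each environment (development, testing, production) can point at its own secret name.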

QUESTION 5

A company using AWS CodeCommit for source control wants to automate its continuous integration and continuous deployment (CI/CD) pipeline on AWS in its development environment. The company has three requirements:

1. There must be a legal and a security review of any code change to make sure sensitive information is not leaked through the source code.

2. Every change must go through unit testing.

3. Every change must go through a suite of functional tests to ensure functionality.

In addition, the company has the following requirements for automation:

1. Code changes should automatically trigger the CI/CD pipeline.

2. Any failure in the pipeline should notify [email protected].

3. There must be an approval step before the assets are staged to Amazon S3 after tests have been performed.

What should a DevOps Engineer do to meet all of these requirements while following CI/CD best practices?

A. Commit to the development branch and trigger AWS CodePipeline from the development branch. Make an individual stage in CodePipeline for security review, unit tests, functional tests, and manual approval. Use Amazon CloudWatch metrics to detect changes in pipeline stages and Amazon SES for emailing [email protected].

B. Commit to mainline and trigger AWS CodePipeline from mainline. Make an individual stage in CodePipeline for security review, unit tests, functional tests, and manual approval. Use AWS CloudTrail logs to detect changes in pipeline stages and Amazon SNS for emailing [email protected].

C. Commit to the development branch and trigger AWS CodePipeline from the development branch. Make an individual stage in CodePipeline for security review, unit tests, functional tests, and manual approval. Use Amazon CloudWatch Events to detect changes in pipeline stages and Amazon SNS for emailing [email protected].

D. Commit to mainline and trigger AWS CodePipeline from mainline. Make an individual stage in CodePipeline for security review, unit tests, functional tests, and manual approval. Use Amazon CloudWatch Events to detect changes in pipeline stages and Amazon SES for emailing [email protected].

Correct Answer: C
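The failure notification in option C relies on a CloudWatch Events rule that matches pipeline state changes and targets an SNS topic. A minimal sketch of such a rule's event pattern, plus a simplified local check that mirrors it (this is not the full CloudWatch Events matching engine):

```python
# Event pattern for a CloudWatch Events rule whose target would be an SNS
# topic that the team's address subscribes to.
PIPELINE_FAILURE_PATTERN = {
    "source": ["aws.codepipeline"],
    "detail-type": ["CodePipeline Pipeline Execution State Change"],
    "detail": {"state": ["FAILED"]},
}


def is_pipeline_failure(event):
    """Simplified local check mirroring the pattern above for one event."""
    pattern = PIPELINE_FAILURE_PATTERN
    return (
        event.get("source") in pattern["source"]
        and event.get("detail-type") in pattern["detail-type"]
        and event.get("detail", {}).get("state") in pattern["detail"]["state"]
    )
```

Stage-level failures can be caught the same way with the `CodePipeline Stage Execution State Change` detail type.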

QUESTION 6

A development team is using AWS CodeCommit to version control application code and AWS CodePipeline to orchestrate software deployments. The team has decided to use a remote master branch as the trigger for the pipeline to integrate code changes. A developer has pushed code changes to the CodeCommit repository but noticed that the pipeline had no reaction, even after 10 minutes. Which of the following actions should be taken to troubleshoot this issue?

A. Check that an Amazon CloudWatch Events rule has been created for the master branch to trigger the pipeline.

B. Check that the CodePipeline service role has permission to access the CodeCommit repository.

C. Check that the developer's IAM role has permission to push to the CodeCommit repository.

D. Check to see if the pipeline failed to start because of CodeCommit errors in Amazon CloudWatch Logs.

Correct Answer: A
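Option A refers to the CloudWatch Events rule that CodePipeline uses to detect CodeCommit changes; if the rule is missing or filters on the wrong branch, pushes never start the pipeline. A minimal sketch of such a rule's event pattern, with a simplified matcher (an assumption covering only exact-value matching, not every CloudWatch Events operator) to show which events it accepts. The repository ARN is hypothetical.

```python
def codecommit_branch_pattern(repo_arn, branch="master"):
    """Event pattern that starts a pipeline when `branch` changes in a repo."""
    return {
        "source": ["aws.codecommit"],
        "detail-type": ["CodeCommit Repository State Change"],
        "resources": [repo_arn],
        "detail": {
            "event": ["referenceCreated", "referenceUpdated"],
            "referenceType": ["branch"],
            "referenceName": [branch],
        },
    }


def matches(pattern, event):
    """Simplified matching: every pattern key must be present in the event,
    nested dicts match recursively, and leaf lists hold the allowed values.
    An array in the event (e.g. "resources") matches if any element is allowed.
    """
    for key, expected in pattern.items():
        if key not in event:
            return False
        actual = event[key]
        if isinstance(expected, dict):
            if not isinstance(actual, dict) or not matches(expected, actual):
                return False
        else:
            values = actual if isinstance(actual, list) else [actual]
            if not any(v in expected for v in values):
                return False
    return True
```

A push to `master` produces a `referenceUpdated` event that this pattern accepts, while a push to any other branch is ignored, which is why a rule created for the wrong branch looks exactly like "no reaction" from the pipeline.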

QUESTION 7

A DevOps Engineer encountered the following error when attempting to use an AWS CloudFormation template to create an Amazon ECS cluster:

An error occurred (InsufficientCapabilitiesException) when calling the CreateStack operation.

What caused this error, and what steps need to be taken to allow the Engineer to successfully execute the AWS CloudFormation template?

A. The AWS user or role attempting to execute the CloudFormation template does not have the permissions required to create the resources within the template. The Engineer must review the user policies and add any permissions needed to create the resources and then rerun the template execution.

B. The AWS CloudFormation service cannot be reached and is not capable of creating the cluster. The Engineer needs to confirm that routing and firewall rules are not preventing the AWS CloudFormation script from communicating with the AWS service endpoints, and then rerun the template execution.

C. The CloudFormation execution was not granted the capability to create IAM resources. The Engineer needs to provide CAPABILITY_IAM and CAPABILITY_NAMED_IAM as capabilities in the CloudFormation execution parameters or provide the capabilities in the AWS Management Console.

D. CloudFormation is not capable of fulfilling the request of the specified resources in the current AWS Region. The Engineer needs to specify a new Region and rerun the template.

Correct Answer: C

Reference: https://github.com/awslabs/serverless-application-model/issues/51
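CloudFormation requires the IAM capability to be acknowledged whenever a template creates IAM resources, and `CAPABILITY_NAMED_IAM` when those resources have custom names. The hypothetical helper below is a rough heuristic that inspects a parsed template and suggests which capabilities to pass; it ignores nested stacks, macros, and transforms.

```python
# Property that gives each IAM resource type a custom (explicit) name.
IAM_NAME_PROPERTIES = {
    "AWS::IAM::Role": "RoleName",
    "AWS::IAM::User": "UserName",
    "AWS::IAM::Group": "GroupName",
    "AWS::IAM::ManagedPolicy": "ManagedPolicyName",
    "AWS::IAM::InstanceProfile": "InstanceProfileName",
}


def required_capabilities(template):
    """Guess the IAM capabilities a parsed template needs (heuristic only)."""
    caps = set()
    for resource in template.get("Resources", {}).values():
        rtype = resource.get("Type", "")
        if not rtype.startswith("AWS::IAM::"):
            continue
        # Any IAM resource needs at least CAPABILITY_IAM.
        caps.add("CAPABILITY_IAM")
        name_prop = IAM_NAME_PROPERTIES.get(rtype)
        if name_prop and name_prop in resource.get("Properties", {}):
            # Custom-named IAM resources need CAPABILITY_NAMED_IAM.
            caps.add("CAPABILITY_NAMED_IAM")
    return sorted(caps)
```

The result can be passed as `Capabilities=required_capabilities(template)` to the boto3 CloudFormation `create_stack` call, or given on the AWS CLI as `--capabilities CAPABILITY_IAM CAPABILITY_NAMED_IAM`. An ECS cluster template typically trips this check because it also creates the instance or task roles.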

QUESTION 8

A media customer has several thousand Amazon EC2 instances in an AWS account. The customer is using a Slack channel for team communications and important updates. A DevOps Engineer was told to send all AWS-scheduled maintenance notifications to the company Slack channel.

Which method should the Engineer use to implement this process in the FEWEST steps?

A. Integrate AWS Trusted Advisor with AWS Config. Based on the AWS Config rules created, the AWS Config event can invoke an AWS Lambda function to send notifications to the Slack channel.

B. Integrate AWS Personal Health Dashboard with Amazon CloudWatch Events. Based on the CloudWatch Events created, the event can invoke an AWS Lambda function to send notifications to the Slack channel.

C. Integrate EC2 events with Amazon CloudWatch monitoring. Based on the CloudWatch Alarm created, the alarm can invoke an AWS Lambda function to send EC2 maintenance notifications to the Slack channel.

D. Integrate AWS Support with AWS CloudTrail. Based on the CloudTrail lookup event created, the event can invoke an AWS Lambda function to pass EC2 maintenance notifications to the Slack channel.

Correct Answer: B

Reference: https://yabhinav.github.io/cloud/awslambda-slack-notifications/
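Option B's flow (AWS Health events delivered through CloudWatch Events to a Lambda function) can be sketched as follows. This is a minimal sketch: the event pattern selects scheduled EC2 changes, `slack_message` builds a Slack incoming-webhook payload from the event fields, and `SLACK_WEBHOOK_URL` is a hypothetical environment variable name for wherever the webhook URL is kept.

```python
import json
import os
import urllib.request

# Event pattern for a CloudWatch Events rule that selects scheduled EC2
# changes (such as maintenance) reported by AWS Health.
HEALTH_PATTERN = {
    "source": ["aws.health"],
    "detail-type": ["AWS Health Event"],
    "detail": {"service": ["EC2"], "eventTypeCategory": ["scheduledChange"]},
}


def slack_message(event):
    """Build a Slack incoming-webhook payload from an AWS Health event."""
    detail = event["detail"]
    descriptions = detail.get("eventDescription", [])
    description = descriptions[0]["latestDescription"] if descriptions else ""
    entities = [e["entityValue"] for e in detail.get("affectedEntities", [])]
    affected = ", ".join(entities) or "n/a"
    start = detail.get("startTime", "n/a")
    return {
        "text": f"*{detail['eventTypeCode']}* starting {start}\n"
        f"Affected: {affected}\n{description}"
    }


def post_to_slack(webhook_url, payload):
    """POST the payload as JSON to the webhook (makes a network call)."""
    request = urllib.request.Request(
        webhook_url,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
    )
    with urllib.request.urlopen(request) as response:
        return response.status


def lambda_handler(event, context):
    # SLACK_WEBHOOK_URL is a hypothetical environment variable name.
    return post_to_slack(os.environ["SLACK_WEBHOOK_URL"], slack_message(event))
```

Keeping the formatting in `slack_message` separate from the network call makes the message logic testable without reaching Slack.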

QUESTION 9

An Engineering team manages a Node.js e-commerce application. The current environment consists of the following components: Amazon S3 buckets for storing content, Amazon EC2 for the front-end web servers, AWS Lambda for executing image processing, and Amazon DynamoDB for storing session-related data.

The team expects a significant increase in traffic to the site. The application should handle the additional load without interruption. The team ran initial tests by adding new servers to the EC2 front-end to handle the larger load, but the instances took up to 20 minutes to become fully configured. The team wants to reduce this configuration time. What changes will the Engineering team need to implement to make the solution the MOST resilient and highly available while meeting the expected increase in demand?

A. Use AWS OpsWorks to automatically configure each new EC2 instance as it is launched. Configure the EC2 instances by using an Auto Scaling group behind an Application Load Balancer across multiple Availability Zones. Implement Amazon DynamoDB Auto Scaling. Use Amazon Route 53 to point the application DNS record to the Application Load Balancer.

B. Deploy a fleet of EC2 instances, doubling the current capacity, and place them behind an Application Load Balancer. Increase the Amazon DynamoDB read and write capacity units. Add an alias record that contains the Application Load Balancer endpoint to the existing Amazon Route 53 DNS record that points to the application.

C. Configure Amazon CloudFront and have its origin point to Amazon S3 to host the web application. Implement Amazon DynamoDB Auto Scaling. Use Amazon Route 53 to point the application DNS record to the CloudFront DNS name.

D. Use AWS Elastic Beanstalk with a custom AMI including all web components. Deploy the platform by using an Auto Scaling group behind an Application Load Balancer across multiple Availability Zones. Implement Amazon DynamoDB Auto Scaling. Use Amazon Route 53 to point the application DNS record to the Elastic Beanstalk load balancer.

Correct Answer: D

QUESTION 10

A company runs an application on Amazon EC2 instances behind an Application Load Balancer. The instances run in an Amazon EC2 Auto Scaling group across multiple Availability Zones in us-east-1. The application stores data in an Amazon RDS MySQL Multi-AZ DB instance. A DevOps Engineer wants to modify the current solution and create a hot standby of the environment in another Region to minimize downtime if a problem occurs in us-east-1. Which combination of steps should the DevOps Engineer take to meet these requirements? (Select THREE.)

A. Add a health check to the Amazon Route 53 alias record to evaluate the health of the primary Region. Use AWS Lambda, configured with an Amazon CloudWatch Events trigger, to elect the Amazon RDS master in the disaster recovery Region.

B. Create a new Application Load Balancer and Auto Scaling group in the disaster recovery Region.

C. Extend the current Auto Scaling group to the subnets in the disaster recovery Region.

D. Enable multi-Region failover for the RDS configuration for the database instance.

E. Deploy a read replica of the RDS instance in the disaster recovery Region.

F. Create an AWS Lambda function to evaluate the health of the primary Region. If it fails, modify the Amazon Route 53 record to point at the disaster recovery Region and elect the RDS master.

Correct Answer: ABE
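The failover steps in options A and E can be sketched with boto3-style calls: promoting the cross-Region read replica so it accepts writes, and building Route 53 failover alias records controlled by a health check. A minimal sketch; the clients are passed in, and all identifiers, zone IDs, and DNS names are hypothetical placeholders.

```python
def promote_dr_replica(rds_client, replica_id):
    """Promote the cross-Region read replica so it accepts writes.

    `rds_client` should be a boto3 RDS client for the disaster recovery
    Region; `replica_id` is the replica's DBInstanceIdentifier.
    """
    response = rds_client.promote_read_replica(DBInstanceIdentifier=replica_id)
    return response["DBInstance"]["DBInstanceStatus"]


def failover_record(name, role, alb_dns, alb_zone_id, health_check_id=None):
    """Build one Route 53 change for a failover alias record to an ALB.

    `role` is "PRIMARY" or "SECONDARY"; the primary record normally carries
    the health check that decides when Route 53 fails over.
    """
    record = {
        "Name": name,
        "Type": "A",
        "SetIdentifier": role.lower(),
        "Failover": role,
        "AliasTarget": {
            "HostedZoneId": alb_zone_id,
            "DNSName": alb_dns,
            "EvaluateTargetHealth": True,
        },
    }
    if health_check_id:
        record["HealthCheckId"] = health_check_id
    return {"Action": "UPSERT", "ResourceRecordSet": record}
```

The two changes (PRIMARY for the us-east-1 ALB with the health check, SECONDARY for the disaster recovery ALB) would be submitted together with `route53.change_resource_record_sets(HostedZoneId=..., ChangeBatch={"Changes": [...]})`, and `promote_dr_replica` is the kind of call the Lambda function in option A would make.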

QUESTION 11

A defect was discovered in production, and a new sprint item has been created for deploying a hotfix. However, any code change must go through the following steps before going into production:

- Scan the code for security breaches, such as password and access key leaks.

- Run the code through extensive, long-running unit tests.

Which source control strategy should a DevOps Engineer use in combination with AWS CodePipeline to complete this process?

A. Create a hotfix tag on the last commit of the master branch. Trigger the development pipeline from the hotfix tag. Use AWS CodeDeploy with Amazon ECS to do a content scan and run unit tests. Add a manual approval stage that merges the hotfix tag into the master branch.

B. Create a hotfix branch from the master branch. Trigger the development pipeline from the hotfix branch. Use AWS CodeBuild to do a content scan and run unit tests. Add a manual approval stage that merges the hotfix branch into the master branch.

C. Create a hotfix branch from the master branch. Trigger the development pipeline from the hotfix branch. Use AWS Lambda to do a content scan and run unit tests. Add a manual approval stage that merges the hotfix branch into the master branch.

D. Create a hotfix branch from the master branch. Create a separate source stage for the hotfix branch in the production pipeline. Trigger the pipeline from the hotfix branch. Use AWS Lambda to do a content scan and use AWS CodeBuild to run unit tests. Add a manual approval stage that merges the hotfix branch into the master branch.

Correct Answer: B

QUESTION 12

A DevOps Engineer must create a Linux AMI in an automated fashion. The newly created AMI identification must be stored in a location where other build pipelines can access it programmatically. What is the MOST cost-effective way to do this?

A. Build a pipeline in AWS CodePipeline to download and save the latest operating system Open Virtualization Format (OVF) image to an Amazon S3 bucket, then customize the image using the guestfish utility. Use the virtual machine (VM) import command to convert the OVF to an AMI, and store the AMI identification output as an AWS Systems Manager parameter.

B. Create an AWS Systems Manager automation document with values instructing how the image should be created. Then build a pipeline in AWS CodePipeline to execute the automation document to build the AMI when triggered. Store the AMI identification output as a Systems Manager parameter.

C. Build a pipeline in AWS CodePipeline to take a snapshot of an Amazon EC2 instance running the latest version of the application. Then start a new EC2 instance from the snapshot and update the running instance using an AWS Lambda function. Take a snapshot of the updated instance, then convert it to an AMI. Store the AMI identification output in an Amazon DynamoDB table.

D. Launch an Amazon EC2 instance and install Packer. Then configure a Packer build with values defining how the image should be created. Build a Jenkins pipeline to invoke the Packer build when triggered to build an AMI. Store the AMI identification output in an Amazon DynamoDB table.

Correct Answer: B
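Storing the AMI ID as a Systems Manager parameter (options A and B) lets any pipeline read it back programmatically. A minimal sketch of the publishing step, assuming a boto3 SSM client is passed in; the parameter name used below is hypothetical.

```python
def publish_ami_id(ssm_client, parameter_name, ami_id):
    """Store a new AMI ID in Parameter Store for other pipelines to read."""
    if not ami_id.startswith("ami-"):
        raise ValueError(f"not an AMI ID: {ami_id}")
    response = ssm_client.put_parameter(
        Name=parameter_name,
        Value=ami_id,
        Type="String",
        # Asks Parameter Store to validate the value as an EC2 image ID.
        DataType="aws:ec2:image",
        # Replace the previous AMI ID; Parameter Store keeps the history.
        Overwrite=True,
    )
    return response["Version"]
```

Other pipelines can then read the latest value with `ssm.get_parameter(Name="/images/linux/latest")["Parameter"]["Value"]`, and standard parameters carry no additional charge, which is part of why this storage choice is cheap.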

QUESTION 13

A DevOps engineer is deploying a new version of a company's application in an AWS CodeDeploy deployment group associated with its Amazon EC2 instances. After some time, the deployment fails. The engineer realizes that all the events associated with the specific deployment ID are in a Skipped status, and code was not deployed to the instances associated with the deployment group.

What are valid reasons for this failure? (Choose two.)

A. The networking configuration does not allow the EC2 instances to reach the internet via a NAT gateway or internet gateway, and the CodeDeploy endpoint cannot be reached.

B. The IAM user who triggered the application deployment does not have permission to interact with the CodeDeploy endpoint.

C. The target EC2 instances were not properly registered with the CodeDeploy endpoint.

D. An instance profile with proper permissions was not attached to the target EC2 instances.

E. The appspec.yml file was not included in the application revision.

Correct Answer: AD
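For option D, the role behind the instance profile needs an EC2 trust policy and read access to the S3 bucket that holds the revisions, so the CodeDeploy agent can download them. A minimal sketch of those two policy documents; the bucket name is a hypothetical placeholder.

```python
import json

# Trust policy letting EC2 assume the role behind the instance profile.
EC2_TRUST_POLICY = {
    "Version": "2012-10-17",
    "Statement": [
        {
            "Effect": "Allow",
            "Principal": {"Service": "ec2.amazonaws.com"},
            "Action": "sts:AssumeRole",
        }
    ],
}


def revision_bucket_policy(bucket):
    """Permissions the CodeDeploy agent needs to fetch revisions from S3."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Effect": "Allow",
                "Action": ["s3:Get*", "s3:List*"],
                "Resource": [f"arn:aws:s3:::{bucket}", f"arn:aws:s3:::{bucket}/*"],
            }
        ],
    }


if __name__ == "__main__":
    print(json.dumps(EC2_TRUST_POLICY, indent=2))
    print(json.dumps(revision_bucket_policy("example-revisions"), indent=2))
```

These documents would be applied with the IAM `create_role`, `put_role_policy`, `create_instance_profile`, and `add_role_to_instance_profile` calls, after which the instance profile is attached to the target instances. Network reachability (option A) still has to be solved separately, for example with a NAT gateway or VPC endpoints.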

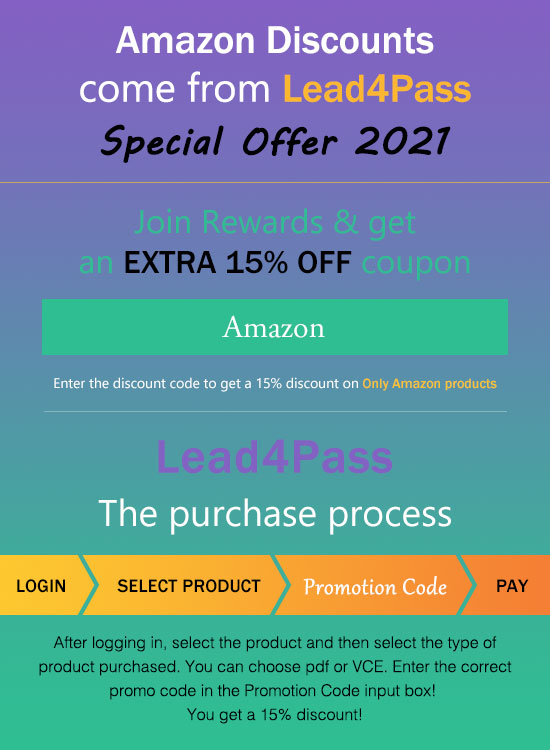

leads4pass Amazon Discount Code 2021

For the full Amazon DOP-C01 exam dumps from leads4pass (DOP-C01 dumps PDF or dumps VCE), visit: https://www.leads4pass.com/aws-devops-engineer-professional.html (Q&As: 449 dumps)

Get free Amazon DOP-C01 dumps PDF online: https://drive.google.com/file/d/1CxJ0SUwQGHmG0XpVDqLLyo4gU07s9uYH/